ImageProcessing step operations

Related image processing operations are grouped together into the following categories:

Filtering

Filtering operations are used to remove noise, emphasize edges, or calculate the strength of edges. There are 9 simple Finite Impulse Response (FIR) filters, 2 multi-purpose Infinite Impulse Response (IIR) filters, a Rank filter, and the UserKernel operation.

The following provides a basic methodology for using filtering operations.

- Specify the source image. Optionally, specify a second source image if the operation requires it.
- Optionally, specify a Region to work on only a portion of the image.
- To control the behavior at the boundaries of a region, you can enable Overscan so that neighboring pixels are taken into account even if they are outside the region.

Sharpening, smoothing, and edge detection

Filtering operations are typically used to sharpen or smooth an image, or to detect edges.

To sharpen an image, especially for 1D bar code and string reading, you can use the Deriche sharpen filter by setting the Operation property to Deriche and the IIROperation property to Sharpen. This works well when the black-to-white transitions are not very sharp and range between 2 shades of gray.

To smooth an image, you can use the Shen smooth filter by setting the Operation property to Shen and the IIROperation property to Smooth. For noise removal, set the Operation property to Smooth.

To detect edges, you can use Sobel edge detection by setting the Operation property to EdgeDetect2. Alternatively, set the Operation property to Deriche and the IIROperation property to LaplacianEdge.

IIR filters

The 2 Infinite Impulse Response (IIR) filters provided are Shen and Deriche. IIR filters consider pixel values over a wider area than the 3X3 kernels, with Shen weighting the nearest pixels more heavily. Shen and Deriche are multi-purpose filters that can sharpen, smooth, or accentuate edges, depending on the IIROperation property. You must also set the IIR Smoothness property to a value from 0 to 100; the default value is 50.
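To show why an IIR filter reaches a wider area than a 3X3 kernel, here is a minimal 1D sketch of a symmetric exponential (Shen-Castan style) smoothing filter. It uses 2 recursive passes, so each output depends on every input, with weights that decay with distance. The function name and the parameter `b` are hypothetical; how the step maps IIR Smoothness (0 to 100) onto such a parameter is not documented here, and this is not the step's actual implementation.

```python
def shen_smooth_1d(signal, b):
    """Symmetric exponential smoothing of a 1D signal using two recursive passes.

    The equivalent kernel is (1 - b) / (1 + b) * b**|k|, so larger b spreads the
    weight over more distant samples. b is a hypothetical parameter in (0, 1).
    """
    if not 0.0 < b < 1.0:
        raise ValueError("b must be strictly between 0 and 1")
    n = len(signal)
    a = 1.0 - b
    # Causal pass (left to right): output depends on this input and the previous output.
    causal = [0.0] * n
    prev = signal[0]  # start from the first sample so a flat signal stays flat
    for i in range(n):
        prev = a * signal[i] + b * prev
        causal[i] = prev
    # Anti-causal pass (right to left) with the same recursion.
    anti = [0.0] * n
    prev = signal[-1]
    for i in range(n - 1, -1, -1):
        prev = a * signal[i] + b * prev
        anti[i] = prev
    # Both passes include the centre sample; remove one copy and renormalize.
    return [(c + r - a * x) / (1.0 + b) for c, r, x in zip(causal, anti, signal)]


impulse = [0.0] * 41
impulse[20] = 1.0
response = shen_smooth_1d(impulse, 0.5)
print(round(response[20], 4), round(sum(response), 4))
```

A 2D version would typically apply the same 1D pass along every row and then along every column.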

FIR filters

Finite Impulse Response (FIR) filters convolve the image with a small rectangular kernel of values (typically 3X3). They include Smooth, HorizontalEdge, VerticalEdge, Sharpen, and 2 variations each of EdgeDetect and LaplacianEdge; EdgeDetect2 is the Sobel operator.
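The convolution that FIR filters perform can be sketched as follows, using the standard Sobel kernels to approximate what EdgeDetect2 computes. This is a minimal sketch under stated assumptions: images are lists of rows, border pixels are handled by replicating the nearest edge pixel (not the step's Overscan behavior), and the gradient magnitude is combined with `math.hypot`; the step may combine or scale the 2 gradients differently. The function names are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def convolve3x3(image, kernel):
    """Convolve a 2D list with a 3x3 kernel, replicating edge pixels at the border."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    # Clamp coordinates so out-of-image neighbors reuse the edge pixel.
                    sy = min(max(y + ky - 1, 0), h - 1)
                    sx = min(max(x + kx - 1, 0), w - 1)
                    # A true convolution flips the kernel in both directions.
                    acc += kernel[2 - ky][2 - kx] * image[sy][sx]
            out[y][x] = acc
    return out


def sobel_magnitude(image):
    """Edge strength from the horizontal and vertical Sobel gradients."""
    gx = convolve3x3(image, SOBEL_X)
    gy = convolve3x3(image, SOBEL_Y)
    return [[math.hypot(a, b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]


# A vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 100, 100] for _ in range(5)]
print(sobel_magnitude(step)[2])
```

The response is non-zero only in the 2 columns that straddle the step, which is what makes the Sobel operator useful for locating edges.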

The Rank filter sorts the pixels selected by the structuring element and writes out the one at the specified Rank position. A rank of 1 gives the smallest value in the neighborhood; the middle rank, which is the default, gives the median; and the largest rank (5, 9, or 25, depending on the size of the structuring element) gives the maximum value.
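A rank filter over a 3X3 square structuring element (9 pixels) can be sketched as below. The function name is hypothetical, and replicating edge pixels at the border is an assumption made so that every neighborhood always contains 9 values.

```python
def rank_filter_3x3(image, rank=5):
    """Replace each pixel with the value at a 1-based rank in its sorted 3x3 neighborhood.

    rank=1 gives the minimum, rank=5 the median, and rank=9 the maximum.
    """
    if not 1 <= rank <= 9:
        raise ValueError("rank must be between 1 and 9 for a 3x3 neighborhood")
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            neighborhood = sorted(
                image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
            out[y][x] = neighborhood[rank - 1]
    return out


noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(rank_filter_3x3(noisy))          # median removes the isolated bright pixel
print(rank_filter_3x3(noisy, rank=9))  # maximum spreads it instead
```

The median (the default middle rank) is a common choice for removing isolated noise pixels because, unlike Smooth, it does not blend the outlier into its neighbors.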

User-defined kernels

The UserKernel operation lets you import a custom filter kernel created outside Matrox Design Assistant, either with the Matrox Imaging Library (MIL) or with Matrox Inspector. When using MIL, you must save a 2D buffer allocated with the attribute M_KERNEL: use MbufAlloc2d to allocate the buffer and MbufSave to save it. See the MIL documentation for more information. When using Matrox Inspector, click the filter button in the toolbar to open the image operations dialog on the filter tab. From there, click the button for a new filter, adjust the kernel parameters, and then click the button that saves the kernel.

Arithmetic

Arithmetic operations perform the specified point-to-point operation on 2 images, an image and a constant, an image, or a constant.

- Specify the source image. Optionally, specify a second source image if the operation requires it.
- Specify a Constant if the operation requires it. You can specify the constant in hexadecimal format by prefixing it with 0x (for example, 0xff).
- Specify a region if you want to work on only a portion of the image.
- To prevent overflow or underflow in certain operations, enable Saturation: results that are too small or too large are then clamped to the minimum and maximum values, respectively, instead of wrapping around.
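The difference between saturating and wrapping around can be sketched for unsigned 8-bit pixels as follows. The function `point_op` is hypothetical; it applies an operation pixel by pixel to 2 images or to an image and a constant, which mirrors the point-to-point behavior described above but is not the step's implementation.

```python
import operator


def point_op(src1, src2, op, saturate=True, bits=8):
    """Apply op pixel by pixel; src2 is an image of the same size or an int constant."""
    max_value = (1 << bits) - 1

    def combine(p, q):
        r = op(p, q)
        if saturate:
            return min(max(r, 0), max_value)  # clamp to [0, max_value]
        return r % (max_value + 1)  # wrap around like unsigned integer arithmetic

    if isinstance(src2, int):
        return [[combine(p, src2) for p in row] for row in src1]
    return [[combine(p, q) for p, q in zip(r1, r2)] for r1, r2 in zip(src1, src2)]


image = [[200, 10]]
print(point_op(image, 0x64, operator.add, saturate=False))  # 200 + 100 wraps to 44
print(point_op(image, 0x64, operator.add, saturate=True))   # 200 + 100 clamps to 255
```

Without saturation, a bright pixel that overflows becomes dark (44 instead of 255), which is usually the artifact you want to avoid.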

Logic

Logical operations perform the specified point-to-point operation on 2 images, an image and a constant, or an image.

- Specify the source image. Optionally, specify a second source image if the operation requires it.
- Specify a Constant if the operation requires it. You can specify the constant in hexadecimal format by prefixing it with 0x (for example, 0xff).
- Specify a region if you want to work on only a portion of the image.
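Point-to-point logical operations, including a hexadecimal constant such as 0x0f, can be sketched as follows. The function names are hypothetical. Parsing with `int(text, 0)` is only an illustration of accepting decimal or 0x-prefixed values; it is not how the step parses its Constant field.

```python
import operator


def parse_constant(text):
    """Accept a decimal constant or a hexadecimal one written with a 0x prefix."""
    return int(text, 0)


def logic_op(src1, src2, op):
    """Point-to-point logical operation; src2 is an image of the same size or an int constant."""
    if isinstance(src2, int):
        return [[op(p, src2) for p in row] for row in src1]
    return [[op(p, q) for p, q in zip(r1, r2)] for r1, r2 in zip(src1, src2)]


def logic_not(src, bits=8):
    """Single-image NOT, masked to the pixel depth so results stay unsigned."""
    mask = (1 << bits) - 1
    return [[~p & mask for p in row] for row in src]


# Keep only the low 4 bits of each pixel.
print(logic_op([[0xAB, 0x12]], parse_constant("0x0f"), operator.and_))
```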

Geometric

Geometric operations flip, rotate, resize, or translate images.

- Specify the source image.
- Specify the Interpolation mode if a smoother approximation is needed. Bilinear is a good choice for most operations. For rotations by a multiple of 90 degrees, NearestNeighbor might be slightly faster without affecting image quality.
- When rotating, the image or region is rotated around its center. To rotate around a different point, specify SourceCenterX and SourceCenterY. If you are rotating around a point far from the center, you might need to specify DestinationCenterX and DestinationCenterY so that the result fits in the image.
- If you are rotating a region, set Overscan to OverscanEnable to use the adjacent pixels to fill the corners. OverscanClear clears the corners to black, and OverscanDisable leaves them unchanged.
- When resizing, to scale the source to an exact size, define a Destination Region and set ScaleFactorX and ScaleFactorY to FillDestination.
- If you are rotating or resizing an image, you might need to increase the Width and Height of the OutputImage.
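To estimate how large the OutputImage must be, you can compute the bounding box of the rotated image, and the scale factors that make a source exactly fill a destination region. This is a minimal sketch with hypothetical function names; it assumes rotation about the image center and assumes FillDestination corresponds to independent X and Y ratios, which the text does not spell out.

```python
import math


def rotated_output_size(width, height, angle_degrees):
    """Smallest Width and Height that hold the whole image after rotating about its center."""
    t = math.radians(angle_degrees)
    c, s = abs(math.cos(t)), abs(math.sin(t))
    # Subtract a tiny tolerance so floating-point noise (cos(90 deg) is about 6e-17)
    # does not round an exact size up by one pixel.
    new_w = math.ceil(width * c + height * s - 1e-9)
    new_h = math.ceil(width * s + height * c - 1e-9)
    return new_w, new_h


def fill_destination_scale(src_w, src_h, dst_w, dst_h):
    """Per-axis scale factors that make the source exactly fill the destination region."""
    return dst_w / src_w, dst_h / src_h


print(rotated_output_size(640, 480, 90))
print(rotated_output_size(100, 100, 45))
print(fill_destination_scale(640, 480, 320, 240))
```

A 45-degree rotation of a 100 x 100 image needs about 142 x 142 pixels, which is why the corners are clipped if the OutputImage keeps its original size.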

Threshold

The threshold operations binarize or clip pixel values to either black, white, or user-specified values based on a threshold condition.

- Specify the source image.
- Select the type of threshold operation to perform: Simple, TwoLevels, HighPass, BandPass, LowPass, or BandReject.
- Use the slider to define the required threshold(s), or enter a value in the appropriate Low or High threshold text box. You can specify a value in hexadecimal by prefixing it with 0x (for example, 0xff).

For Simple, HighPass, and LowPass operations, you can optionally enable Automatic Threshold to calculate the threshold value automatically. This setting works best on images with distinct bright and dark areas.

For information on the Simple and TwoLevels operations, see the Thresholding subsection of the Procedure for using the BlobAnalysis step section in Chapter 3: BlobAnalysis step.

HighPass, BandPass, LowPass, and BandReject implement clipping operations: based on a condition, they set a range of pixel values to a user-specified value and leave the rest untouched. For example, if your lighting is uneven, you can clip all pixels above a certain threshold to white.

- Select HighPass to clamp all values below the specified High threshold to a constant output value while preserving higher values.
- Select BandPass to clamp pixels below the Low threshold to one constant output value and pixels above the High threshold to another. Pixel values that fall between the specified Low and High thresholds are left untouched.
- Select LowPass to clamp all values above the specified Low threshold to a constant output value while preserving lower values.
- Select BandReject to force pixel values that fall between the specified Low and High thresholds to a constant output value. Pixel values outside this range are left untouched.

Note that, by default, the low (MinValue) and high (MaxValue) constant output values correspond to output buffer extremes (for example, 0 and 255 for an 8-bit buffer). The middle constant output value (MiddleValue) defaults to the output buffer's middle value (for example, 128). You can specify custom output values.
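The 4 clipping modes can be sketched per pixel as below, using the 8-bit defaults described above (MinValue 0, MiddleValue 128, MaxValue 255). The text does not state which constant each mode writes or whether the thresholds are inclusive, so the choices here (HighPass writes MinValue, LowPass writes MaxValue, BandReject writes MiddleValue, inclusive ranges) are assumptions, and the function names are hypothetical.

```python
def clip_pixel(p, mode, low, high, min_value=0, middle_value=128, max_value=255):
    """Apply one clipping mode to a single pixel value (assumed semantics, see lead-in)."""
    if mode == "HighPass":    # preserve values at or above `high`
        return min_value if p < high else p
    if mode == "LowPass":     # preserve values at or below `low`
        return max_value if p > low else p
    if mode == "BandPass":    # preserve values in [low, high]
        if p < low:
            return min_value
        if p > high:
            return max_value
        return p
    if mode == "BandReject":  # replace values in [low, high]
        return middle_value if low <= p <= high else p
    raise ValueError(f"unknown mode: {mode}")


def clip_image(image, mode, low=0, high=255, **outputs):
    """Apply clip_pixel to every pixel of a 2D list."""
    return [[clip_pixel(p, mode, low, high, **outputs) for p in row] for row in image]


row = [[0, 50, 100, 150, 200, 250]]
print(clip_image(row, "LowPass", low=100))  # clip everything above 100 to white
```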

PolarTransform

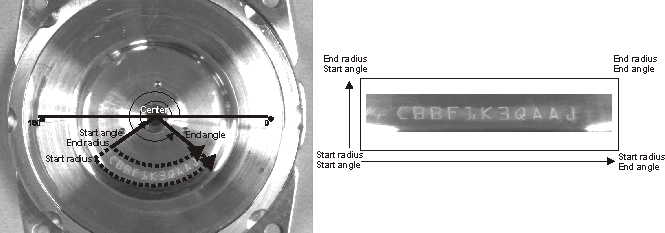

The RectangularToPolar operation unwraps a circular ring or ring sector of the image into a rectangular image. This can be used, for example, to read text wrapped around a circle with the StringReader step (for more information, see Chapter 19: StringReader step).

- Specify the source image.
- Click the Specify button and define the ring sector in the SourceImage display by clicking 5 points in the image:
  - The first click sets the center. The second and third clicks define the 2 radii: the second click ends up at the bottom of the rectangle and the third click at the top. The 4th and 5th clicks define the start and end angles. By default, the scan proceeds counterclockwise from the start angle, but this can be changed.
  - Press the Escape key to start over.
- Alternatively, you can specify the sector by entering the Center, Radius, and Angle values.
- Set the sweep Direction to Clockwise or Counterclockwise.
- Specify the Interpolation mode if a smoother approximation is needed. NearestNeighbor is the default.

If you are unwrapping a large circle and the outer circumference is longer than the width of the OutputImage, you must increase its Width appropriately.
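The unwrapping and the width rule of thumb can be sketched as follows, using nearest-neighbor sampling only. This is a minimal sketch under assumptions: the function names are hypothetical, angles are measured counterclockwise on screen with the image y axis pointing down, and samples that fall outside the source image are set to 0. It follows the click convention above: the first radius maps to the bottom row and the second radius to the top row.

```python
import math


def rectangular_to_polar(image, cx, cy, radius_1, radius_2, start_deg, end_deg,
                         out_w, out_h, counterclockwise=True):
    """Unwrap a ring sector of a 2D list into an out_h x out_w image (nearest neighbor)."""
    h, w = len(image), len(image[0])
    delta = (end_deg - start_deg) if counterclockwise else (start_deg - end_deg)
    sweep = delta % 360 or 360  # equal start and end angles mean a full turn
    sign = 1 if counterclockwise else -1
    out = [[0] * out_w for _ in range(out_h)]
    for row in range(out_h):
        frac_r = row / (out_h - 1) if out_h > 1 else 0.0
        r = radius_2 + (radius_1 - radius_2) * frac_r  # top row samples radius_2
        for col in range(out_w):
            frac_a = col / (out_w - 1) if out_w > 1 else 0.0
            theta = math.radians(start_deg + sign * sweep * frac_a)
            # Subtract the sine term because image rows grow downward.
            xi = round(cx + r * math.cos(theta))
            yi = round(cy - r * math.sin(theta))
            if 0 <= xi < w and 0 <= yi < h:
                out[row][col] = image[yi][xi]
    return out


def min_output_width(radius_outer, sweep_deg):
    """Width giving roughly one column per pixel along the outer arc."""
    return math.ceil(2 * math.pi * radius_outer * sweep_deg / 360)


print(min_output_width(100, 360))  # a full circle of radius 100 needs about 629 columns
```

Sizing the width from the outer arc length keeps the outer text from being undersampled; the inner rows are then slightly oversampled instead.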

LutMap

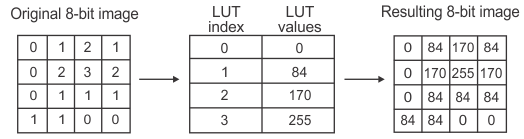

A lookup table (LUT) mapping, performed by the LutMap operation, replaces each pixel value with the precalculated value stored at the corresponding table index. A LUT can reduce a multi-step or complex point operation to a single mapping step. LutMap operations include WindowLeveling and UserLut. The following image demonstrates how a LUT is used:

WindowLeveling is a mapping that takes a range of source image values and stretches or compresses it to fit within an output range. To set the Input range and the Output range, you can use the sliders or enter a value or expression. Pixel values below the input range are mapped to the minimum of the output range, and values above it are mapped to the maximum. WindowLeveling is useful for increasing contrast and for converting data to a different pixel depth (for example, mapping 0-1023 down to 0-255). See the Improving contrast subsection of the Common tasks using the ImageProcessing step section earlier in this chapter for an example of how you can use the WindowLeveling operation.
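A window-leveling LUT and the LUT mapping itself can be sketched as below. The function names are hypothetical, and rounding to the nearest integer (Python's `round`) is an assumption; the step may round or truncate differently.

```python
def window_level_lut(in_min, in_max, out_min, out_max, in_bits):
    """Build a LUT that maps [in_min, in_max] linearly onto [out_min, out_max].

    Inputs below in_min map to out_min and inputs above in_max map to out_max.
    """
    lut = []
    span = in_max - in_min
    for v in range(1 << in_bits):
        if v <= in_min:
            lut.append(out_min)
        elif v >= in_max:
            lut.append(out_max)
        else:
            lut.append(round(out_min + (v - in_min) * (out_max - out_min) / span))
    return lut


def lut_map(image, lut):
    """Replace every pixel with the LUT entry at the pixel's value."""
    return [[lut[p] for p in row] for row in image]


# Convert 10-bit data (0-1023) to 8 bits (0-255).
lut = window_level_lut(0, 1023, 0, 255, in_bits=10)
print(lut_map([[0, 1023], [512, 1]], lut))
```

The LUT is computed once for every possible input value; mapping an image then costs one table lookup per pixel, however complex the formula used to build the table.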

UserLut lets you import the mapping from a file that was created with the Matrox Imaging Library (MIL) and has the attribute M_LUT. Alternatively, you can pass an array of LUT values generated from an expression.

The following provides a basic methodology for using the UserLut operation.

- Specify the source image to map. Specify a region if you want to work on only a portion of the image.
- Select the Import a file option if you are importing a pregenerated LUT from a file. Otherwise, select the Define an array option and use the RANGE or REPEAT function in the Advanced editor to generate a LUT.

The following example uses the RANGE function to generate a LUT. In this case, the SELECT function then applies a POWER function expression to each element of the LUT to perform gamma correction.

Note that the gamma value is typically between 0.5 and 3.
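The same idea as the RANGE and POWER expression can be sketched in Python: generate every index of the LUT, then apply a power function to each entry. This is not Design Assistant expression syntax, and the convention out = max * (in / max) ** (1 / gamma), where gamma above 1 brightens mid-tones, is an assumption; some tools use the exponent gamma directly. The function name is hypothetical.

```python
def gamma_lut(gamma, bits=8):
    """LUT for gamma correction: out = max * (in / max) ** (1 / gamma), rounded."""
    max_value = (1 << bits) - 1
    # Equivalent of generating the range 0..max_value and applying a power to each element.
    return [round(max_value * (v / max_value) ** (1.0 / gamma)) for v in range(max_value + 1)]


lut = gamma_lut(2.2)
print(lut[0], lut[64], lut[128], lut[255])
```

Both ends of the range are fixed (0 stays 0, the maximum stays the maximum), so gamma correction redistributes mid-tones without changing the black and white points.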

Copy and Convert

The FillOrClear operation can either fill or clear regions with the specified value.

The Copy operation will copy the pixels from the source image region to the destination image region.

The CopyConditional operation implements a masked copy: it copies the pixels in Source 1 to the output image only where the corresponding pixels in Source 2 (the mask image) satisfy the specified Condition. By default, it copies the pixels that correspond to non-zero pixels in Source 2. For example, you can use it to copy only the pixels lying under non-zero mask pixels, where the mask is the output image of a BlobAnalysis step.
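A masked copy can be sketched as follows. The function name is hypothetical, and leaving output pixels unchanged where the condition fails is an assumption about what happens to the rest of the output image.

```python
def copy_conditional(source1, mask, output, condition=lambda m: m != 0):
    """Copy source1 pixels into a new output image wherever the mask pixel satisfies condition.

    Pixels where the condition fails keep their value from `output` (assumed behavior).
    """
    return [
        [s if condition(m) else o for s, m, o in zip(rs, rm, ro)]
        for rs, rm, ro in zip(source1, mask, output)
    ]


source = [[1, 2], [3, 4]]
mask = [[0, 255], [255, 0]]  # for example, a binary blob image
background = [[9, 9], [9, 9]]
print(copy_conditional(source, mask, background))
```

Passing a different `condition`, such as `lambda m: m > 100`, mirrors choosing a Condition other than the default non-zero test.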

The Convert operation can convert a color image to another color space. For example, RGB can be converted to HSL and HSL can be converted to RGB. For more information, see the Dealing with color images section in Chapter 2: Building a project.

The Convert operation can also convert from RGB and extract a single component, for example RGB to L, RGB to H, or RGB to Y.
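Extracting a single component from an RGB image can be sketched with the textbook formulas for HSL lightness (the mean of the largest and smallest components) and for luma Y using ITU-R BT.601 weights. The step's exact coefficients and output scaling are not given here, so treat these formulas and the hypothetical function names as assumptions.

```python
def rgb_to_l(r, g, b):
    """HSL lightness: the mean of the largest and smallest component."""
    return (max(r, g, b) + min(r, g, b)) / 2


def rgb_to_y(r, g, b):
    """Luma with ITU-R BT.601 weights (assumed; the step may use other coefficients)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def convert_image(rgb_image, component):
    """Turn a 3-band image (rows of (r, g, b) tuples) into a 1-band image."""
    return [[component(*px) for px in row] for row in rgb_image]


rgb = [[(255, 0, 0), (0, 0, 255)]]
print(convert_image(rgb, rgb_to_l))
print(convert_image(rgb, rgb_to_y))
```

Pure red and pure blue have the same lightness but very different luma, which is why the choice of component matters when converting to grayscale.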

It is not necessary to use an ImageProcessing step to work with Hue or Lightness. There are shortcuts available in the Image Alternate menu.

When using conversions that change the number of bands from 3 to 1 or from 1 to 3, you must explicitly set the number of bands in the output image on the main Configuration pane.

Color

The Color category lets you perform distance and projection operations.

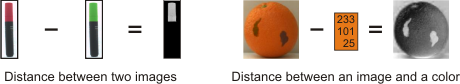

Distance

You can calculate the point-to-point distance (difference) between 2 images, or between an image and a specific color, using the Distance operation. Calculating color distance can be useful, for example, to identify regions of interest or to find flaws.

The following provides a basic methodology for using the Distance operation.

- Click on the Add button from the Configuration pane and select Distance from the Color category.
- Specify a 3-band image as the source image (Source 1).
- Specify whether to compare the source image to another image or to a color.
- Set the type of distance calculation to Euclidean, Manhattan, or Mahalanobis.

  Euclidean distance is the standard color distance and is typically sufficient, particularly for RGB. For HSL, you should use Manhattan distance. Mahalanobis distance is generally the slowest, though most robust, type of distance calculation and is usually suited to elongated colors. For more information, see the Color distance subsection of the ColorMatcher step advanced concepts section in Chapter 11: ColorMatcher step.
- Optionally, normalize (remap) distances according to the greatest calculated distance. In certain cases, this can help distinguish the distance between similar colors. For more information, see the Distance normalization subsection of the ColorMatcher step advanced concepts section in Chapter 11: ColorMatcher step.
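The 3 distance types and the normalization step can be sketched with their standard definitions. The function names are hypothetical. For Mahalanobis distance, the covariance matrix would typically be estimated from sample pixels of the reference color; how the step obtains it is not described here. Normalizing so that the greatest distance maps to 255 is an assumed output range.

```python
import math


def euclidean(c1, c2):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))


def manhattan(c1, c2):
    return sum(abs(a - b) for a, b in zip(c1, c2))


def covariance(samples):
    """Sample covariance (3x3) of a list of 3-band color samples."""
    n = len(samples)
    mean = [sum(s[k] for s in samples) / n for k in range(3)]
    return [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
             for j in range(3)] for i in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via its adjugate."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("covariance matrix is singular")
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


def mahalanobis(color, reference, cov):
    """Distance from `reference`, scaled by the reference color's covariance."""
    inv = inverse3(cov)
    d = [x - r for x, r in zip(color, reference)]
    return math.sqrt(sum(d[i] * inv[i][j] * d[j] for i in range(3) for j in range(3)))


def normalize(distances, out_max=255):
    """Remap a distance image so that its greatest distance becomes out_max."""
    peak = max(max(row) for row in distances)
    if peak == 0:
        return [[0.0] * len(row) for row in distances]
    return [[d * out_max / peak for d in row] for row in distances]


# An "elongated" color: it varies 3 times more along the first band.
cov = [[9, 0, 0], [0, 1, 0], [0, 0, 1]]
print(mahalanobis((3, 0, 0), (0, 0, 0), cov), mahalanobis((0, 3, 0), (0, 0, 0), cov))
```

The 2 test colors are the same Euclidean distance from the reference, but Mahalanobis distance treats the offset along the color's natural direction of variation as 3 times closer.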

Projection

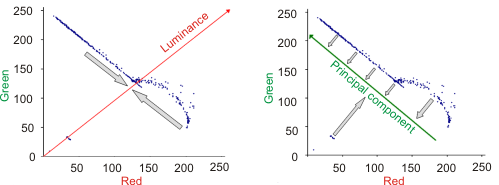

You can optimally convert a color image to grayscale using the Projection operation (principal component projection).

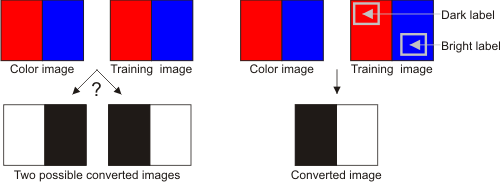

Unlike the Convert operation in the Copy and Convert category, projection does not extract a single color space component. Instead, it performs a principal component analysis (PCA) on a training image to calculate the color image's strongest vector (principal component): the line, within the color space, along which the colors vary the most. Each color pixel is then projected to a point on this vector, which minimizes the loss of information and produces a grayscale image with maximum contrast, thereby better differentiating the colors in the original source image.

For optimal results, your color image should contain 2 principal colors. You can also achieve good results if the image contains mixtures of these 2 colors.

The following provides a basic methodology for using the Projection operation.

- Click on the Add button from the Configuration pane and select Projection from the Color category.
- Display the image you want to use as the training image and click on the Set Training image button in the Project toolbar to set the current image as the training image. Typically, the training image is the same as the color image to convert (Source 1). The training image determines the projection that is applied to the source image, so the projection is not recalculated every time the step runs.
- Optionally, click on the Mark source pixels button in the Project toolbar to identify the pixels in the training image to use for calculating the principal component projection.

  By default, the projection uses the whole training image. However, this can result in a less effective projection, since all colors are considered, even those you are not interested in. This is especially problematic if the image's background, which you typically want to ignore, is predominant.
- Optionally, click on the Mark bright/dark pixels button in the Project toolbar to identify the pixels in the training image that should correspond to bright/dark pixels in the projection.

  Typically, the colors are successfully projected between the darkest (black) and brightest (white) ends of the grayscale range. However, in some cases the projection cannot determine which colors should be bright and which should be dark, and the direction of the projection is arbitrary. In this case, you should identify the bright/dark pixels.
- Optionally, select the pixel range with which to map the projection.

  By default, the projection result is mapped according to the dynamic range of all the pixels in the source image, even if you identify the source pixels with which to calculate the principal component. If necessary, you can further improve the conversion to grayscale by mapping the projection according to the dynamic range of only the marked source pixels. The result is similar to an increase in contrast.

  When projecting with source pixels, the color image pixels that fall outside this dynamic range (for example, the background) might be saturated. In this case, the resulting image might not adequately represent the source image.
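The projection steps above can be sketched as a principal component analysis: find the main axis of color variation in the training pixels, project every pixel onto it, optionally flip the axis so that a chosen bright color lands at the white end, and map the result to 0-255. This is a minimal sketch, not the step's implementation. The function names are hypothetical, power iteration is used here as a simple way to find the principal component, and only the bright color is handled (a dark color would flip the axis the other way).

```python
import math


def principal_axis(training, iterations=100):
    """Mean color and unit principal component of the training pixels (power iteration)."""
    n = len(training)
    mean = [sum(p[k] for p in training) / n for k in range(3)]
    cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in training) / n
            for j in range(3)] for i in range(3)]
    # Start from the covariance row with the largest variance; a fixed start such as
    # (1, 1, 1) fails when it is orthogonal to the principal axis (e.g. red vs green).
    v = list(cov[max(range(3), key=lambda k: cov[k][k])])
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            raise ValueError("training pixels have no usable color variation")
        v = [x / norm for x in w]
    return mean, v


def project_to_gray(pixels, mean, axis, bright_color=None, range_pixels=None):
    """Project colors onto the axis and map them to 0-255.

    The axis sign is arbitrary, so bright_color (if given) fixes which end is white.
    range_pixels (if given) defines the dynamic range; other pixels saturate.
    """
    def proj(p):
        return sum((p[k] - mean[k]) * axis[k] for k in range(3))

    sign = -1.0 if bright_color is not None and proj(bright_color) < 0 else 1.0
    values = [sign * proj(p) for p in pixels]
    ref = values if range_pixels is None else [sign * proj(p) for p in range_pixels]
    lo, hi = min(ref), max(ref)
    if hi == lo:
        return [0.0] * len(values)
    return [min(max((v - lo) * 255.0 / (hi - lo), 0.0), 255.0) for v in values]


red, green = (255, 0, 0), (0, 255, 0)
mean, axis = principal_axis([red, green, red, green])
print(project_to_gray([red, green, (127.5, 127.5, 0)], mean, axis, bright_color=red))
```

Red and green have nearly the same luminance, so a plain RGB-to-Y conversion would make them look alike; projecting onto their principal axis places them at opposite ends of the gray range instead.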