- - or -

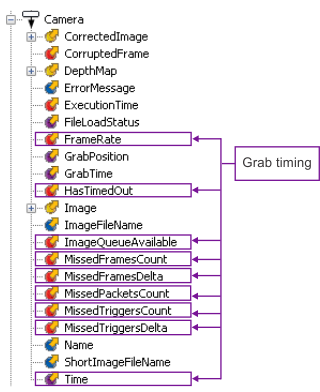

Grab timing

When trying to grab and process multiple images in a short period of time, processing time and the delay imposed by your camera can affect the minimum time between triggers (trigger gap or trigger speed). If the trigger speed is too fast, images that are received while processing is still ongoing could be dropped. However, if the trigger speed is too slow, processing steps might wait idly for the next acquired image, even though there are both resources and time to perform the steps.

Image queues can offset the difference between the processing time of an image and the time to acquire an image. To learn whether frames or triggers are being dropped, use the camera outputs related to grab timing.

Queuing images

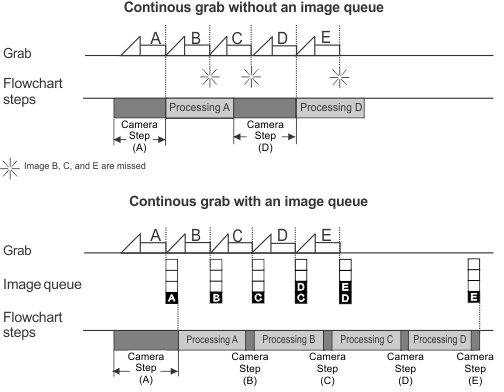

An image queue is a series of image buffers that each store a single grabbed image. By default, enabling triggered grab mode also enables an image queue for the camera; this means that if a trigger arrives before the previous image has finished processing, the new image is queued (buffered). In continuous grab mode, the image queue is disabled by default.

When the image queue is enabled and full, frames are missed until an image is processed and removed from the queue, or until the image queue is cleared. For more information on clearing the queue, see the Stopping the grab subsection of the Changing the acquisition settings at runtime section later in this chapter. To change the size of the image queue (regardless of the grab mode), set the Image Queue Size input.

Multi-buffering with image queues

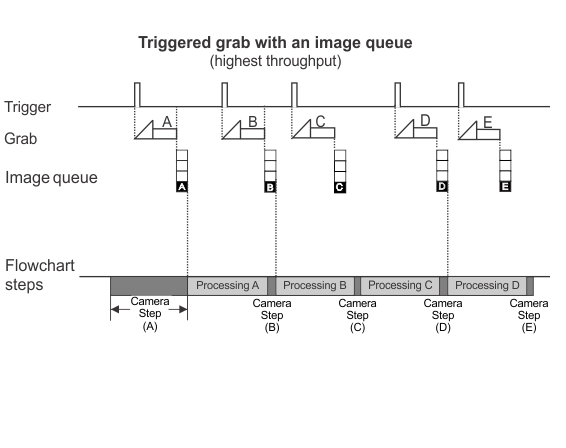

Multi-buffering involves grabbing into one image buffer while processing previously grabbed images that are stored in the image queue. This technique allows you to grab and process images concurrently. Matrox Design Assistant creates an image queue for each camera specified in the Platform Configuration dialog.

In the following image and attached animation, triggers occur at varying intervals, not strictly synchronized with the flowchart or image processing speed; having an image queue allows the runtime platform to grab the next image when the trigger arrives, even while still processing the previously grabbed image.

Note that images in a queue are not replaced with newer images if the queue is full and is not being processed quickly enough; instead, new images are dropped. An image is only removed from an image queue when it starts being processed.
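The drop policy described above can be illustrated with a minimal Python sketch. This is a toy model only, not the Matrox Design Assistant implementation; the `ImageQueue` class and its method names are hypothetical and exist purely for illustration.

```python
from collections import deque


class ImageQueue:
    """Toy model (hypothetical) of a fixed-size queue that drops NEW images when full."""

    def __init__(self, size):
        self.size = size          # number of image buffers in the queue
        self.buffers = deque()    # oldest image on the left
        self.missed = 0           # images dropped because the queue was full

    def on_grab(self, image):
        """Called when a grab completes; returns True if the image was queued."""
        if len(self.buffers) >= self.size:
            self.missed += 1      # the new image is dropped; queued images are kept
            return False
        self.buffers.append(image)
        return True

    def start_processing(self):
        """Remove and return the oldest image, or None if the queue is empty."""
        return self.buffers.popleft() if self.buffers else None


queue = ImageQueue(size=2)
results = [queue.on_grab(name) for name in ("img0", "img1", "img2")]
first = queue.start_processing()
print(results, first, queue.missed)
```

In this sketch, the third grab is dropped because both buffers are occupied, and a buffer only becomes free again once `start_processing` takes the oldest image.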

If required, change the size of the image queue using the Image Queue Size input, on the PhysicalCameraN page of the Platform Configuration dialog. The default value for Image Queue Size is 4. The maximum queue size is limited by the amount of non-paged memory (DMA) reserved. For more information, see the Memory usage section in Chapter 59: Optimizing your runtime projects.

When dealing with a large number of images that are slow to process, it is possible that the image queue will be full and a new grab will be missed. The number of missed grabs is accessible from the MissedFramesCount output and the MissedFramesDelta output of the Camera step. The MissedFramesCount output is the total number of images missed since the start of the project, whereas the MissedFramesDelta output is only the number of images missed since the last execution of a Camera step.
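The relationship between a running total and a per-execution delta can be sketched as follows. This is an illustrative model only; the `MissedFrameCounter` class is hypothetical and is not part of the Camera step, which exposes these values directly as outputs.

```python
class MissedFrameCounter:
    """Hypothetical tracker for a running total and a since-last-read delta."""

    def __init__(self):
        self.count = 0                 # total misses since the start
        self._count_at_last_read = 0   # total recorded at the previous read

    def record_miss(self, n=1):
        """Record n images dropped because the queue was full."""
        self.count += n

    def read_delta(self):
        """Return the misses since the previous read, then reset the reference."""
        delta = self.count - self._count_at_last_read
        self._count_at_last_read = self.count
        return delta


counter = MissedFrameCounter()
counter.record_miss(2)
first_delta = counter.read_delta()    # two misses since the start
counter.record_miss()
second_delta = counter.read_delta()   # one miss since the previous read
third_delta = counter.read_delta()    # nothing missed since the previous read
print(counter.count, first_delta, second_delta, third_delta)
```

A delta greater than zero flags a problem in the most recent cycle, while the total shows how often the problem has occurred overall.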

In situations where it is critical not to miss a grab, use the ImageQueueAvailable output of the Camera step to verify that there are still buffers available and take action when the number of buffers available drops below some application-dependent limit (for example, signal the operator using the operator view).

Once the image queue is enabled, any Camera step will no longer take the next available image from the camera, but will take it from the image queue instead. This maximizes project efficiency.

Under ideal circumstances, the time taken to process each image during runtime would be identical, so you could set the trigger interval to approximately that time. In reality, more complex steps (such as the ModelFinder step or the StringReader step) can take longer to process some images than others, particularly if the part being inspected is badly damaged, missing, or accidentally occluded. Therefore, the average trigger interval should be just longer than the average time required to process an image, and the image queue size should allow the flexibility to accommodate the occasional slow processing cycle.
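To get a feel for how queue size absorbs an occasional slow cycle, the following Python sketch simulates a fixed trigger interval against a list of per-image processing times. It is a simplified model under stated assumptions: triggers are evenly spaced, one image is processed at a time, and an image leaves the queue the moment its processing starts. The function name and the sample timings are hypothetical.

```python
from collections import deque


def simulate_missed_frames(trigger_interval, processing_times, queue_size):
    """Return how many images are dropped in this simplified model.

    Image i arrives at i * trigger_interval. An image that arrives while the
    processor is idle starts immediately; otherwise it waits in the queue, or
    is dropped if the queue is already full.
    """
    waiting = deque()     # processing times of queued images, oldest first
    busy_until = 0.0      # time at which the current processing cycle ends
    missed = 0

    for i, duration in enumerate(processing_times):
        now = i * trigger_interval
        # Start queued images whose turn came up before this arrival.
        while waiting and busy_until <= now:
            busy_until += waiting.popleft()
        if not waiting and busy_until <= now:
            busy_until = now + duration      # processor idle: start right away
        elif len(waiting) < queue_size:
            waiting.append(duration)         # processor busy: buffer the image
        else:
            missed += 1                      # queue full: the new image is dropped
    return missed


# Hypothetical timings (ms): mostly fast cycles with one slow cycle of 40 ms.
times = [5, 5, 40, 5, 5, 5, 5, 5]
missed_by_size = {size: simulate_missed_frames(10, times, size) for size in (1, 2, 3, 4)}
print(missed_by_size)
```

With these sample values, the average processing time is below the 10 ms trigger interval, yet the single slow cycle still causes drops unless the queue holds at least three images.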

You can limit some of the search time of a processing step by configuring that step's Timeout input.

Grabbing at design-time

At design-time (or emulation mode), the queueing mechanism is not used. Each time a Camera step is run, it makes a request to the DA Agent process that is part of the runtime environment, asking for the next image. If trigger mode is not enabled, the DA Agent activates a grab. If trigger mode is enabled, the DA Agent initiates a single triggered grab: this means it waits for a trigger after it receives the request and then returns the resulting image to the Camera step. If the trigger signal is not received (for example, because the source of the trigger signal is set to an auxiliary input signal and you are in emulation mode), the DA Agent will wait until the trigger timeout period expires, and then report an error.

In emulation mode, the ImageQueueAvailable output of the Camera step returns the size of the queue.

Continuous grab mode with image queues

When your camera is in continuous grab mode, its image queue is disabled by default because missed frames are typically not of importance when this mode is selected. Once the Camera step is executed, only the most recently grabbed image is passed to the Camera step; any previous unprocessed grabbed images are lost. If required, you can enable the image queue for a camera in continuous grab mode. If so, the process is identical to queuing images when in triggered mode.

Continuous grab mode typically consumes resources unnecessarily and any connected strobe will flash continuously; however, this mode most efficiently processes images when there are no triggers available.

Trigger speed

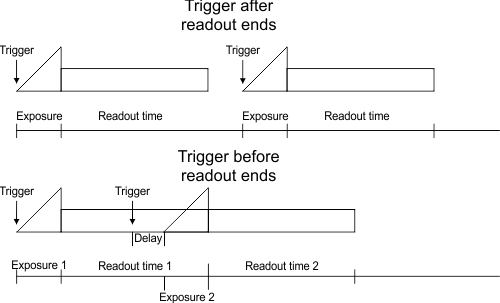

If processing speed is not considered, the maximum speed at which hardware triggers or the software triggers (the Trigger step) can be issued is determined by configurable and fixed constraints. The configurable constraint is the specified exposure time of the camera. The fixed constraint is the sensor readout time, which is fixed based on the camera model and sensor type.

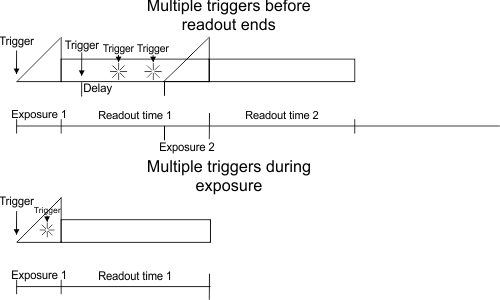

If a trigger is received after the readout of the previous grab, there is no problem. With a supported Matrox smart camera, when a trigger is received before the end of the previous readout, but after the previous exposure, the next exposure will be delayed so that the exposure ends when the previous readout does. That is, in this case, the next exposure overlaps the end of the previous readout. See the image below for a graphical explanation.

In the case where multiple triggers are received before the readout ends, only the first trigger after the exposure will be acknowledged (until the readout completes). All other subsequent triggers will be ignored. Note that, in the case where one or more triggers are received during the exposure of the grab, those triggers are also ignored.
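The trigger rules described above can be sketched as a small Python model. This is one interpretation of the text, not the camera firmware: it assumes a trigger is ignored if it arrives before the end of the most recently scheduled exposure, and that an accepted trigger arriving during readout has its exposure delayed so that it ends no earlier than the previous readout. The function name is hypothetical, and any user-added trigger delay is left out.

```python
def schedule_triggers(trigger_times, exposure, readout):
    """Return (exposure_start_times, ignored_trigger_times) for this model."""
    starts, ignored = [], []
    exposure_end = readout_end = float("-inf")   # no grab in progress yet

    for t in sorted(trigger_times):
        if t < exposure_end:
            # Arrived during an exposure, or after another trigger was already
            # accepted for the current readout: ignored.
            ignored.append(t)
            continue
        # Delay the exposure, if needed, so it ends when the previous readout does.
        start = max(t, readout_end - exposure)
        starts.append(start)
        exposure_end = start + exposure
        readout_end = exposure_end + readout

    return starts, ignored


# Hypothetical timings (ms): 2 ms exposure, 10 ms readout.
starts, ignored = schedule_triggers([0, 1, 5, 7, 20, 40], exposure=2, readout=10)
print(starts, ignored)
```

In this example, the trigger at 1 ms falls inside the first exposure and is ignored, the trigger at 5 ms is accepted but its exposure is delayed to 10 ms, and the trigger at 7 ms is ignored because a trigger was already accepted during that readout.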

Note that you can also add a delay to a trigger by setting the Delay input on the PhysicalCameraN page of the Platform Configuration dialog. This user-added trigger delay is added to the automatic delay caused by triggers received before the readout ends, when it occurs. The user-added trigger delay is not shown in the above image, for simplicity.

The MissedTriggersCount output stores the total number of missed triggers since the last start of the project, and the MissedTriggersDelta output stores the number of missed triggers since the last execution of a Camera step. Note that missed triggers should not be confused with missed frames due to a full image queue, discussed above. If the value of the MissedTriggersCount output increases, triggers are being received at a time when they cannot be handled (for example, while exposing the previous triggered image).

For a GigE/USB3 Vision camera, the maximum trigger rate depends on your camera. Verify your camera's specifications and TriggerOverlap feature, if this feature is supported on your camera.

Camera outputs related to grab timing

The following Camera step outputs relate to the timing of your grab operation:

| Camera output | Description |
| --- | --- |
| Camera.FrameRate | Returns the frame rate. |
| Camera.HasTimedOut | Returns a yes/no value specifying whether the camera has timed out. |
| Camera.ImageQueueAvailable | Returns the number of buffers still available for use in the image queue. |
| Camera.MissedFramesCount | Returns the total number of images missed, due to a full image queue, since the start of the project. |
| Camera.MissedFramesDelta | Returns the number of images missed, due to a full image queue, since the last execution of a Camera step. |
| Camera.MissedPacketCount | Returns the total number of packets missed since the start of the project. |
| Camera.MissedTriggersCount | Returns the total number of trigger signals that were ignored since the start of the project. A trigger is typically ignored when it arrives during the exposure of the previous image. In this case, this value can help you determine a correct debounce setting for a trigger, or can indicate that you are triggering too frequently. |
| Camera.MissedTriggersDelta | Returns the number of trigger signals ignored since the last execution of a Camera step. |
| Camera.Time | Returns the timestamp at which the Camera step completes. |

Note: depending on the runtime platform (for example, Matrox Iris GTR), the MissedTriggersCount will include trigger pulses received while the image queue is full.